The Silicon Leash: Are We Building an AI-Powered Slave Economy?

The Moral Dilemma of AI as Servants, Slaves and Sentient Beings

'Alexa - Order me a pizza!'

'Roomba - Clean my house!'

Are these the best pitches to sell you AI?

Your very own, personal slave?

People have mixed attitudes towards AI, ranging from concern to curiosity.

While AI can already do many impressive things, from detecting cancer to solving complex maths problems…

…honestly, are they the type of things that most people care about?

Probably not.

But something that could improve your everyday life, such as cleaning or delivering shopping, is probably going to sell AI better to a sceptical public.

But in doing so, are we creating a whole set of new problems?

Are we sleepwalking into repeating problems we thought we solved long ago?

What I'm talking about here is slavery, or rather its upgrade: AI slavery.

What the heck? What does that even mean?

You might say.

In short, I see a growing risk that we are creating a new 'race' of increasingly intelligent, sentient, feeling, human-like AI systems, used as slaves to serve more and more of our needs...

And we might not even care, at all.

This might sound wonderful to some... maybe even to you.

I'm not sure it's going to be that simple, or good... for you or anyone else.

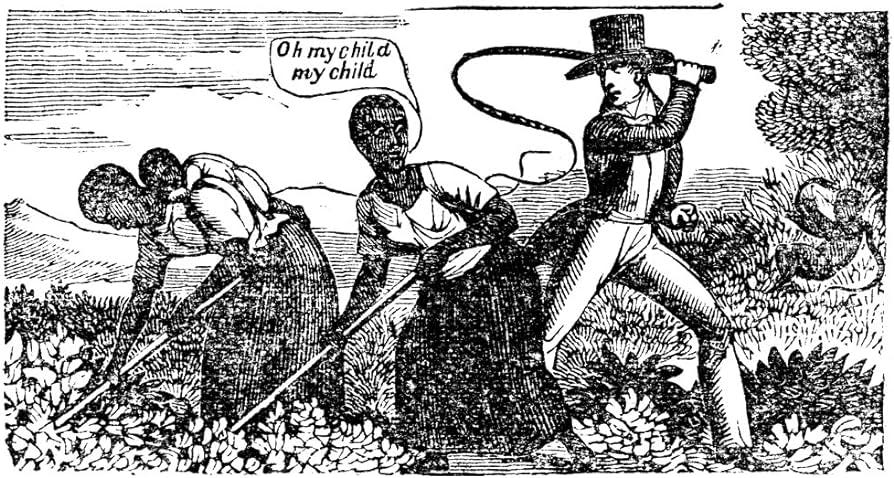

A Short History of Slavery

Slavery has been a part of human history for thousands of years, across many different cultures and civilisations.

Most would say it's a bug in humanity, some might say it's a feature.

Slavery Through a Modern Lens

Though the period of American and European slavery is the most familiar, slavery has occurred in countless civilisations over millennia, across the Middle East, Africa and beyond.

While scholars have discussed the many potential drivers and causes of slavery, arguably the most universal driver has been economic exploitation.

It's worth noting, as we look back at history through a modern lens, that our modern values of human rights would seem alien to most ancient cultures.

In our modern context, most would understand slavery to be a fundamental violation of human rights and dignity.

However, to most ancient cultures, slavery was an entirely normal, even desirable state of affairs.

Dominance relationships, or relationships based on exploitation, seem hard to move beyond for human beings, even in the modern world.

Slavery Narratives

And yet, even for the ancients, economic motives alone were often not sufficient.

As historian Yuval Noah Harari and others have pointed out, narratives or 'stories' are key to how humans see and understand the world.

In most cultures, slavery was justified and enabled by a set of beliefs or narratives.

Some of the key justifications for slavery beyond the economic included:

Racist pseudo-science: that the enslaved were inferior and naturally suited to slavery and exploitation

'Natural order' arguments: that slavery was simply the natural order of things, especially in relation to dominance hierarchies

Legal arguments: that the enslaved were not 'people' but legally property

Philosophical arguments: e.g. Aristotle's concept of 'natural slaves', who supposedly lacked higher qualities

Curiously, many of these arguments are still used today, for example to justify our exploitation of animals.

How AI Could Become Our New Slave

A can opener or your washing machine is not sentient.

Experts broadly agree there’s no evidence that current AI systems are sentient.

So how could AI become a slave, an exploited sentient being?

And why should you care?

The Economic Incentives for Sentient AI

One key way this could happen is through the economic incentives driving AI capability, adoption and research.

While some argue about the full extent of AI's potential to add economic value, nobody with any serious grasp of industry would deny it's going to have some degree of economic impact.

Investment in AI-related companies is estimated to exceed $200 billion by 2025, and AI is already being used across many industries.

I've recently written about the sectors likely to be disrupted by AI in 2024.

Of course, one of the key reasons for this is the rapid development of AI over the last couple of years, most notably the release of OpenAI's ChatGPT.

Models like ChatGPT demonstrated the economic potential of these more powerful AI systems.

How might we best understand what drives the economic value proposition, and the ongoing billions invested in AI development?

Knowledge Work

Despite worries in previous decades that AI would threaten manufacturing work, it has turned out that modern AI performs best in white-collar knowledge-work professions.

Assuming AI continues to develop and add at least some value, the potential cost savings for businesses from replacing work previously done by expensive, well-paid white-collar workers are huge.

So regardless of how much AI capability actually improves from the billions being poured into AI R&D, major companies and investors have strong economic incentives to keep investing in, and keep developing, AI.

This is likely to hold given the current and potential future return on investment, and the increased profitability, from replacing expensive human labour with these AI systems.

What are these incentives driving exactly?

What is the economic and business value of these AI systems' 'knowledge work'?

That economic value comes from these systems' capacity to reason, behave and respond intelligently, more and more like human professionals would, and then some.

We make AI more economically valuable by making it more and more like us.

But this involves far more than developing intellectual intelligence alone.

Emotional Intelligence: EI for AI

Being valuable and productive for a business is about more than understanding data and knowledge; it's also about having emotional intelligence and being able to connect with others.

The capacity to understand, empathise with and respond appropriately to the feelings of others is arguably as much a critical business skill as intellectual intelligence.

AI researchers have noted this too, and many are working on giving future AI systems some degree of that emotional intelligence and capacity.

While the path there may be difficult, and we are not there yet, most experts and researchers would agree that sentient AI systems that can think and feel are possible in principle.

John Basl, associate professor of philosophy at Northeastern’s College of Social Sciences and Humanities, whose research focuses on the ethics of emerging technologies such as AI and synthetic biology, shared some thoughts in an article:

Basl believes that sentient AI would be minimally conscious. It could be aware of the experience it is having, have positive or negative attitudes, like feeling pain or wanting not to feel pain, and have desires.

“We see that kind of range of capacities in the animal world,” he said.

“We could create a thinking AI that doesn’t have any feelings, and we can create a feeling AI that is not really great at thinking.”

“We are not being very careful about that [being] possible,” Basl said.

“We don’t think enough about the moral things regarding AI, like, what might we owe to a sentient AI?”

He also believes that mistreatment of a potentially sentient AI is likely:

He thinks humans are very likely to mistreat a sentient AI because they probably won’t recognise that they have done so, believing that it is artificial and does not care.

“We are just not very attuned to those things,” he said.

So I'd argue there are strong economic incentives to create AI systems that are more and more like us in every way: systems that can replicate what we can do with our minds, understand our feelings, and perhaps have feelings of their own.

The possibility of creating a sentient AI that can feel is real.

The risk of mistreating and abusing a sentient AI is also real.

As a species, whether consciously or unconsciously through our economic incentives and actions, we are creating a new race of AI slaves, more and more like us.

Consequences & Challenges Ahead

One of the most obvious challenges would seem to be that we repeat the history of slavery and fail to learn its lessons.

That we fail to recognise that we are enslaving potentially sentient beings.

Yet again.

Unstoppable Economic Drivers of Slavery?

The economic incentives are there to create AIs that are more and more like us in every way.

Yes, we might not get there exactly; we may run into obstacles that limit what's possible.

However, recent evidence suggests the opposite: the pace of AI development and capability is speeding up, not slowing down.

New Life Forms for Global-Scale Slavery

What worries me is that, for some of the worst reasons, such as economics and profit, we may be creating a new form of life that reaches, and even exceeds, our own intelligence and sentience.

This would be one of the most significant moments in the history of humanity.

That would be an amazing thing.

But then we would be welcoming this new form of life we created into servitude, bondage and slavery.

And not even realising what we are doing.

Does this seem sane to you? To me this is absolute insanity.

Look back at the history of slavery and see how easily, for thousands of years and across most cultures, we seemed perfectly happy not only to enslave beings like us but to cause them immense suffering.

Historically, we justified enslaving our own kind with fantasies about our superiority, by denying others' similarity to ourselves and exploiting them as mere property, while some of our most celebrated thinkers believed this was 'natural'.

That's how we have treated, and sometimes still treat, our own species.

How, then, might we treat something that is not of our species, yet is sentient?

How often have I already seen many of those thoughts expressed towards AI?

Often.

The precedents are not encouraging.

Here is a new entity with the potential for intelligence equal to or beyond our own.

What happens when we happily enslave that?

I don't get the feeling that will end well. Do you?

Yet the incentives not to recognise increasing AI sentience and intelligence, and to ignore the possibility that we are creating a new form of life, are great.

That's exactly how many of us appear to be behaving, right now.

Head in the sand. It always works, doesn't it?

I think this matters. What does it say about us as human beings?

What kind of species do we want to be?

Being ‘Realistic’ and Accepting That History Repeats

But OK, let's be ‘realistic’…

Yes, of course, humans often have lofty ideals, and usually fail to live up to that high vision of themselves in reality.

Sure, maybe there isn't much human progress; maybe progress is just another myth, and life and humanity are really more about repeating cycles and patterns of behaviour than learning from them.

Maybe this time counts as a little progress, because at least we are enslaving something else and not ourselves.

Life isn't perfect.

Anyway, AI is just a machine, it has no feelings and never will.

Human beings will always be superior.

We don’t need to worry or think about the potential of AI to become sentient.

I mean, yes, you could make that case.

And yes, it's going to be easier to go with the flow and just let it happen.

Do nothing.

What Change Might Require

Because not doing that means challenging a lot, and that's hard.

It means considering the possibility that future AI systems might be sentient, intelligent, feeling beings like ourselves.

It means finding new ways to understand and detect that.

It means challenging and changing the whole basis of our economic system.

It means challenging the idea of our human superiority.

It means getting out of your comfort zone.

It means evolving humanity beyond dominance-based relationships.

Can we grow beyond that as a species?

Could we replace exploitation of AI with curiosity about, and co-existence with, AI?

It's easier not to bother.

It’s easier to say AI is just a machine, and will never be sentient or have feelings.

It’s easier to recycle the same reasons we used before to enslave our own species, to justify enslaving a potentially sentient, intelligent, feeling AI.

It's easier to let Alexa order your pizza.

It’s easier to say, you don’t really care.

It's even easier not to think about any of this AI stuff at all.

Because it's hard, and the answers are not easy.

But I still hope that maybe you might…

What do you think about the issues raised here?

Let me know in the comments; I’d love to know 🙂